BibTeX

@inproceedings{lin2023finegrained,

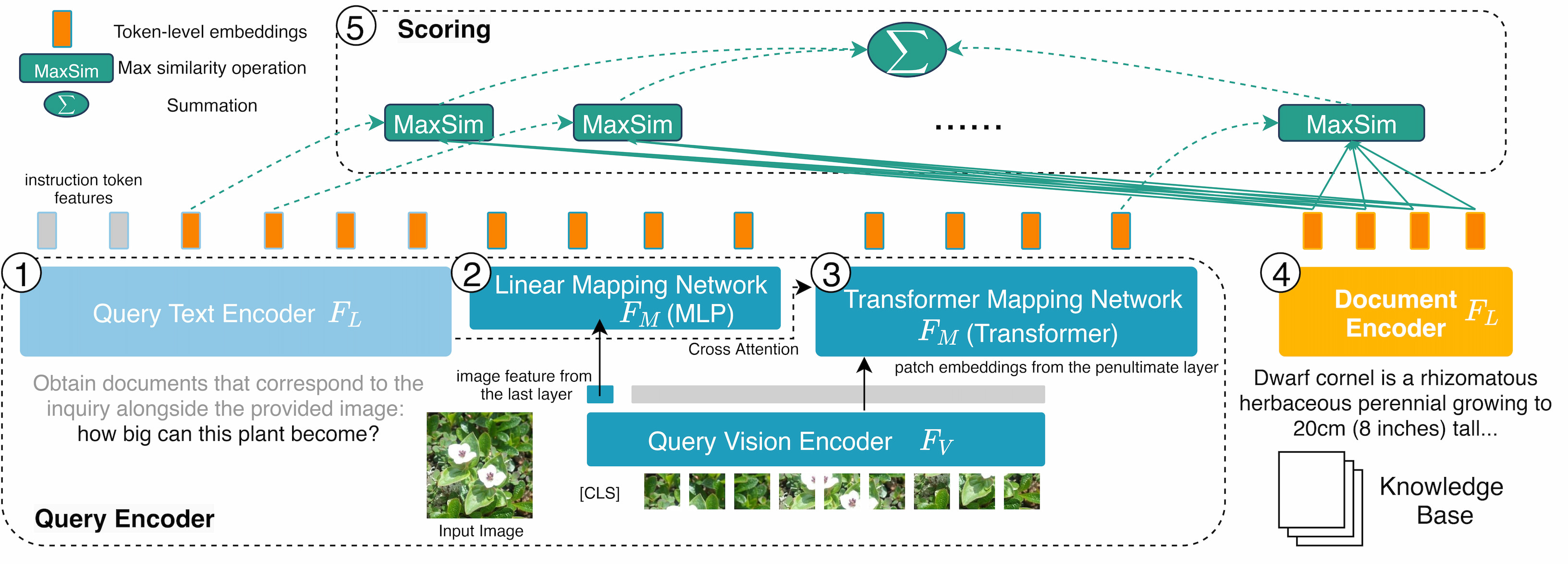

title={Fine-grained Late-interaction Multi-modal Retrieval for Retrieval Augmented Visual Question Answering},

author={Weizhe Lin and Jinghong Chen and Jingbiao Mei and Alexandru Coca and Bill Byrne},

booktitle={Thirty-seventh Conference on Neural Information Processing Systems},

year={2023},

url={https://openreview.net/forum?id=IWWWulAX7g}

}

@inproceedings{lin-etal-2024-preflmr,

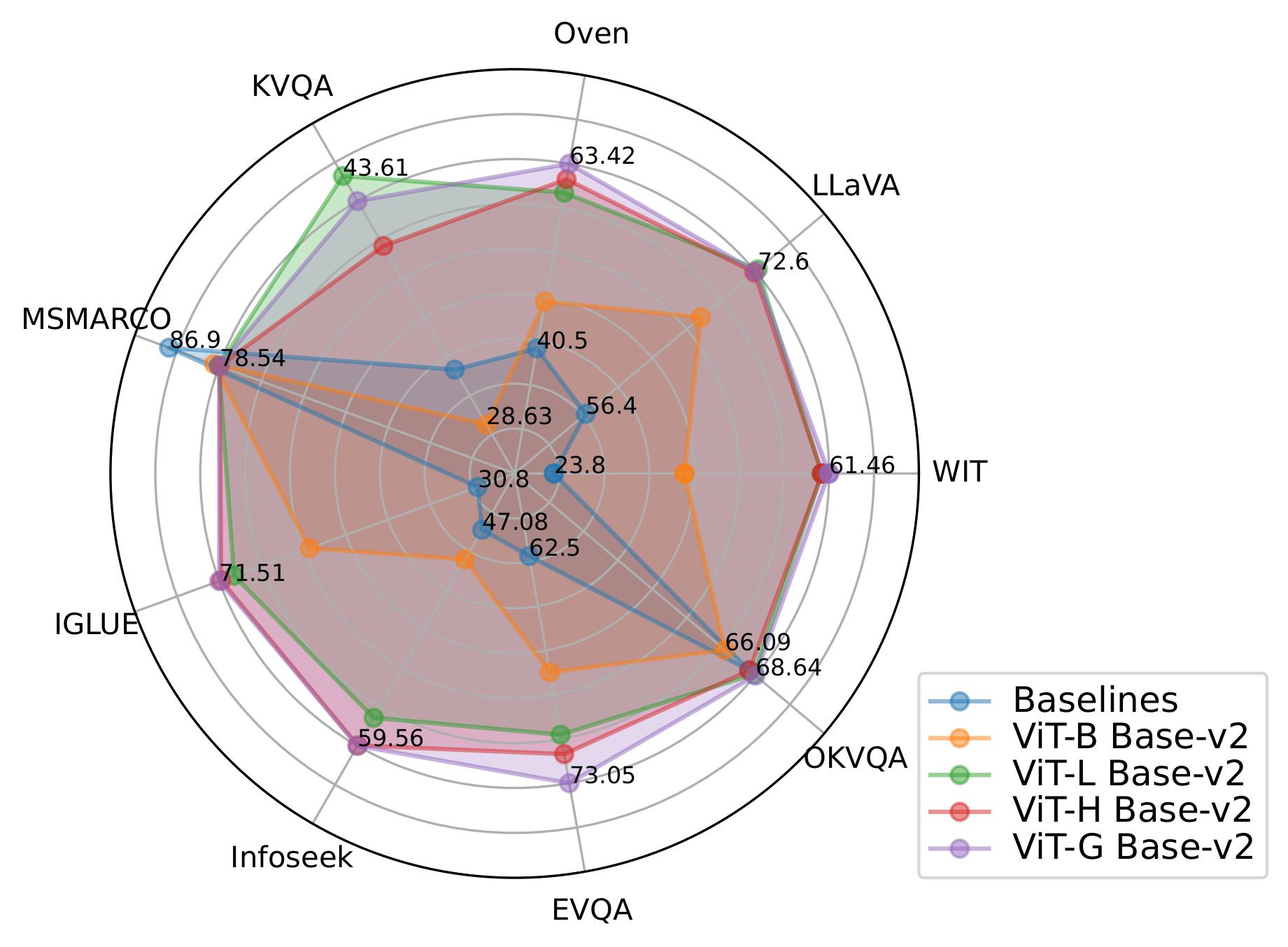

title = "{P}re{FLMR}: Scaling Up Fine-Grained Late-Interaction Multi-modal Retrievers",

author = "Lin, Weizhe and Mei, Jingbiao and Chen, Jinghong and Byrne, Bill",

editor = "Ku, Lun-Wei and Martins, Andre and Srikumar, Vivek",

booktitle = "Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = aug,

year = "2024",

address = "Bangkok, Thailand",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2024.acl-long.289",

pages = "5294--5316",

abstract = "Large Multimodal Models (LMMs) excel in natural language and visual understanding but are challenged by exacting tasks such as Knowledge-based Visual Question Answering (KB-VQA) which involve the retrieval of relevant information from document collections to use in shaping answers to questions. We present an extensive training and evaluation framework, M2KR, for KB-VQA. M2KR contains a collection of vision and language tasks which we have incorporated into a single suite of benchmark tasks for training and evaluating general-purpose multi-modal retrievers. We use M2KR to develop PreFLMR, a pre-trained version of the recently developed Fine-grained Late-interaction Multi-modal Retriever (FLMR) approach to KB-VQA, and we report new state-of-the-art results across a range of tasks. We also present investigations into the scaling behaviors of PreFLMR intended to be useful in future developments in general-purpose multi-modal retrievers.",

}